Connected and Autonomous Vehicles

Multi-Source Feature Fusion for Object Detection Association in Connected Vehicle Environments

Intelligent transportation systems (ITS) have evolved rapidly over the last decade. Many production vehicles now come standard with an array of sensors, both inside and outside the vehicle, that allow it to perceive what is happening in the cabin as well as in the surrounding area. These sensors enable vehicle safety systems such as advanced driver-assistance systems (ADAS), which can alert the driver to dangers on the road or, in some cases, drive the vehicle themselves.

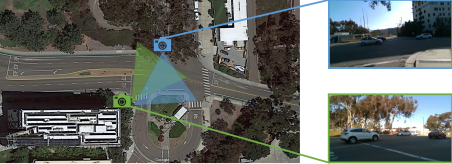

Increased vehicular perception is needed for self-driving vehicles to reach higher levels of automation, as well as to improve the safety of vehicles with human drivers. Even Waymo One [1], which is considered to have Level 4 autonomous driving capabilities as defined by the Society of Automotive Engineers' (SAE) six levels of driving automation [2], does not have perfect perception of its surroundings. It can run into problems when navigating, for example in occlusion scenarios in which an object, e.g. a building or another vehicle, blocks its sensors from seeing oncoming vehicles. No sensor array allows a vehicle to see everything; even the most sophisticated combination of sensors will have some gaps in perception due to limitations of the sensors or factors beyond the sensors' control. However, by having vehicles communicate with each other and share sensor data, gaps in one vehicle's perception can be filled in by another. Coverage can be extended even further by including street infrastructure sensors.