Wireless AR/VR

Multi-user Difference Encoding & Hybrid-casting to Address Ultra-high Bandwidth Requirement

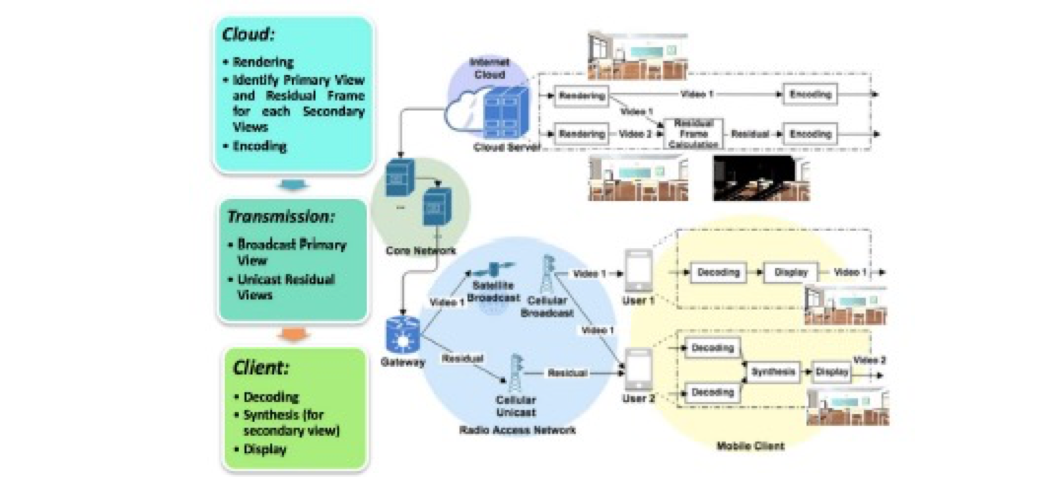

We investigate virtual space applications, such as virtual classrooms and virtual galleries, in which the scenes and activities are rendered in the cloud, with multiple views captured and streamed to each end device. A key challenge is the high bandwidth required to stream all the user views, which leads to high operational cost and potentially large delays in a bandwidth-restricted wireless network. For multi-user streaming scenarios, we propose a hybrid-cast approach that reduces the bandwidth needed to transmit the multiple user views without compromising view quality.

Specifically, we define the primary view as the user view that shares the most pixels with the other users' views, and we treat the remaining views as secondary views. For each secondary view, we compute its residual view, that is, its difference from the primary view, by extracting the common view from the secondary view. We term this process of extracting the common view and determining the residual views multi-user encoding (MUE). Instead of unicasting the rendered video of each user, we multicast/broadcast the primary view from the edge node to all participating users and unicast each residual view to the corresponding secondary user.
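The idea of primary view selection and residual computation can be illustrated with a minimal sketch. It rests on a strong simplifying assumption that is not part of the work described here: all views are taken to be already aligned on a common pixel grid, so "common pixels" reduces to pixel-wise equality. A real common view extraction would have to account for the different user viewpoints. The function names (`common_mask`, `pick_primary`, `residual_view`, `reconstruct`) are hypothetical and chosen only for illustration.

```python
import numpy as np


def common_mask(a, b):
    """Boolean H x W mask of pixels that are identical in two H x W x C views."""
    return np.all(a == b, axis=-1)


def pick_primary(views):
    """Return the index of the view sharing the most pixels with all other views."""
    scores = []
    for i, v in enumerate(views):
        shared = sum(
            int(common_mask(v, w).sum()) for j, w in enumerate(views) if j != i
        )
        scores.append(shared)
    return int(np.argmax(scores))


def residual_view(secondary, primary):
    """Keep only the pixels of `secondary` that differ from `primary`.

    Returns the residual image (zero where the views agree) and the mask
    marking which pixels the residual carries.
    """
    mask = ~common_mask(secondary, primary)
    residual = np.where(mask[..., None], secondary, np.zeros_like(secondary))
    return residual, mask


def reconstruct(primary, residual, mask):
    """Rebuild a secondary view on the client from the primary view and residual."""
    return np.where(mask[..., None], residual, primary)


# Toy data (assumed): three 4x4 RGB views that differ in a single pixel each.
v0 = np.zeros((4, 4, 3), dtype=np.uint8)
v1 = v0.copy()
v1[0, 0] = 255
v2 = v0.copy()
v2[3, 3] = 9
views = [v0, v1, v2]

primary_idx = pick_primary(views)
residual, mask = residual_view(v1, views[primary_idx])
rebuilt = reconstruct(views[primary_idx], residual, mask)
```

In this toy setup the secondary user only needs the primary view plus the few pixels flagged in `mask` to recover its full view, which is the intuition behind sending the primary view once and each residual separately.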

We formulate the problem of minimizing the total bitrate needed to transmit the user views using hybrid-casting and describe our approach. We develop a common view extraction approach and a smart grouping algorithm to realize the hybrid-cast approach. Simulation results show that hybrid-casting can significantly reduce the total bitrate and avoid congestion-related latency compared with the traditional cloud-based approach of transmitting all the views as individual unicast streams, thereby addressing the bandwidth challenges of the cloud, with additional benefits in cost and delay.
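The quantity being minimized can be sketched as simple bookkeeping. The sketch below is not the formulation used in this work; it assumes that a multicast primary view costs a single stream per group regardless of the number of receivers, and that each group consists of one primary view and any number of residual views. The function names and the bitrate values are hypothetical inputs for illustration, not measured results.

```python
def unicast_total(view_bitrates):
    """Total bitrate when every user view is sent as its own unicast stream."""
    return sum(view_bitrates)


def hybrid_cast_total(groups):
    """Total bitrate under hybrid-casting.

    `groups` is a list of (primary_bitrate, residual_bitrates) pairs:
    the primary view is multicast once per group, and each residual
    view is unicast to its secondary user.
    """
    return sum(primary + sum(residuals) for primary, residuals in groups)


# Illustrative inputs only (arbitrary units): three users in one group.
baseline = unicast_total([10, 10, 10])
hybrid = hybrid_cast_total([(10, [2, 3])])
```

Under these assumptions, hybrid-casting helps whenever the residual views are cheaper to encode than the full views they replace, which is why grouping users with highly overlapping views matters.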